Can LLMs save journalism?

Joe Rogan, Bernie Sanders, and the Washington Post headline that sparked a deeper reflection on media incentives, LLMs, and the collapse of trust in journalism.

TL;DR

Last week, Joe Rogan misrepresented a Washington Post article on climate change, leaning on the headline alone to push a misleading narrative. It wasn’t just a fluke; it was a reflection of a media ecosystem that rewards outrage and obscures nuance. What if LLMs could help us reclaim the substance beneath the clickbait, and even reshape how we value knowledge itself?

The episode that sparked this

I was watching the recent Joe Rogan Experience episode with Bernie Sanders, an unlikely pairing. Rogan, often associated with right-leaning, libertarian takes, was hosting the independent senator from Vermont and self-described democratic socialist. Naturally, I was curious.

Their conversation bounced from inequality to elite power structures to, you guessed it, climate change.

That’s when things got interesting.

Rogan brought up a Washington Post article. He paraphrased the headline as something like:

“The Earth is in one of its coldest periods in 200 million years.”

And with that, he confidently dismissed global warming as overblown fear-mongering. His point: if even the left-leaning Washington Post is saying this, doesn’t it prove climate change is a hoax?

Bernie Sanders hadn’t read the article, so he didn’t challenge the framing. And just like that, the moment passed. But for millions of viewers, it landed as a mic drop.

“Rogan OWNS climate activists with THEIR OWN data.”

Except… that’s not what the article said.

Behind the headline

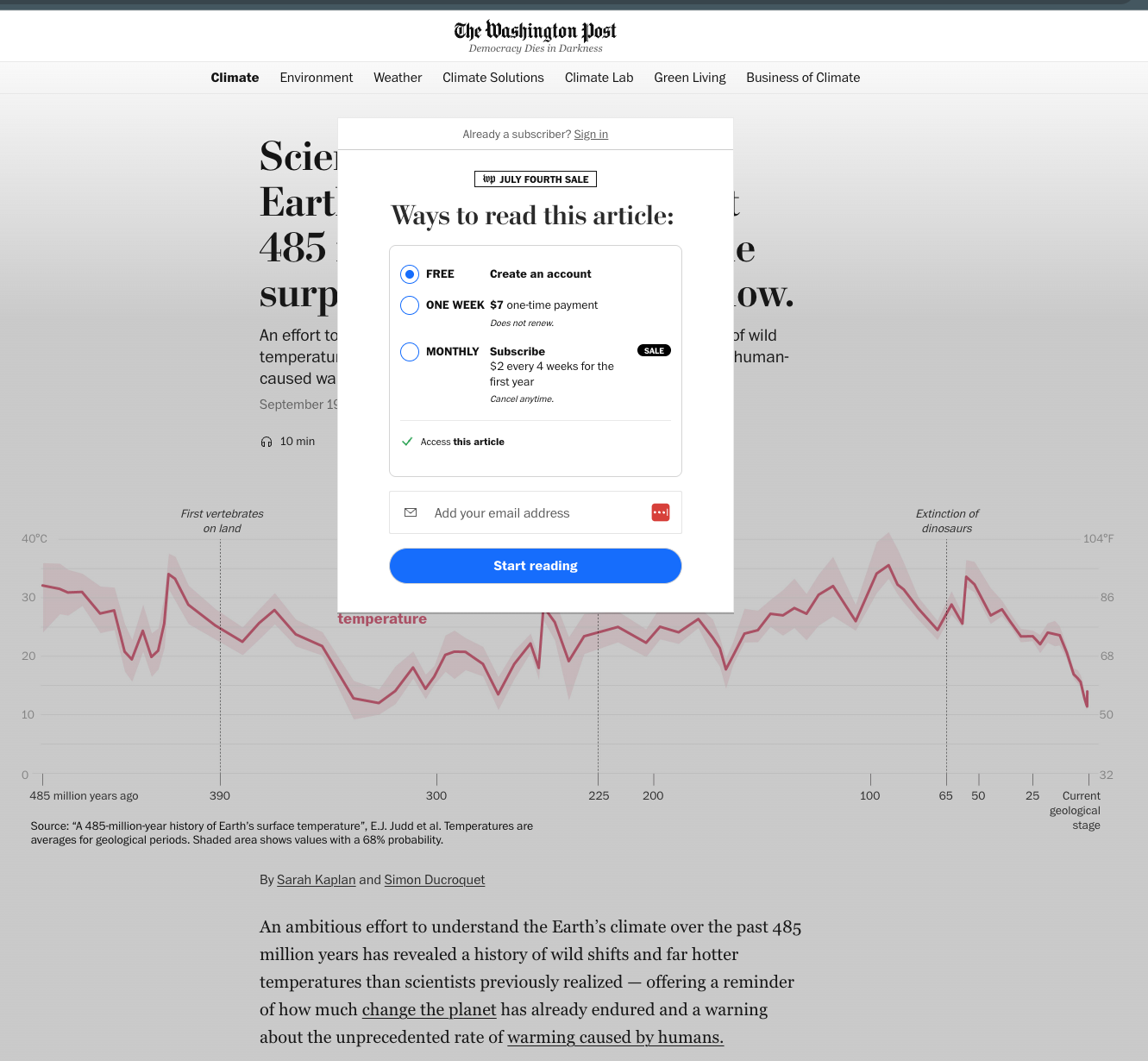

Curious, I tried to find the article myself. It was behind a paywall.

All I could access were the headline and this graph:

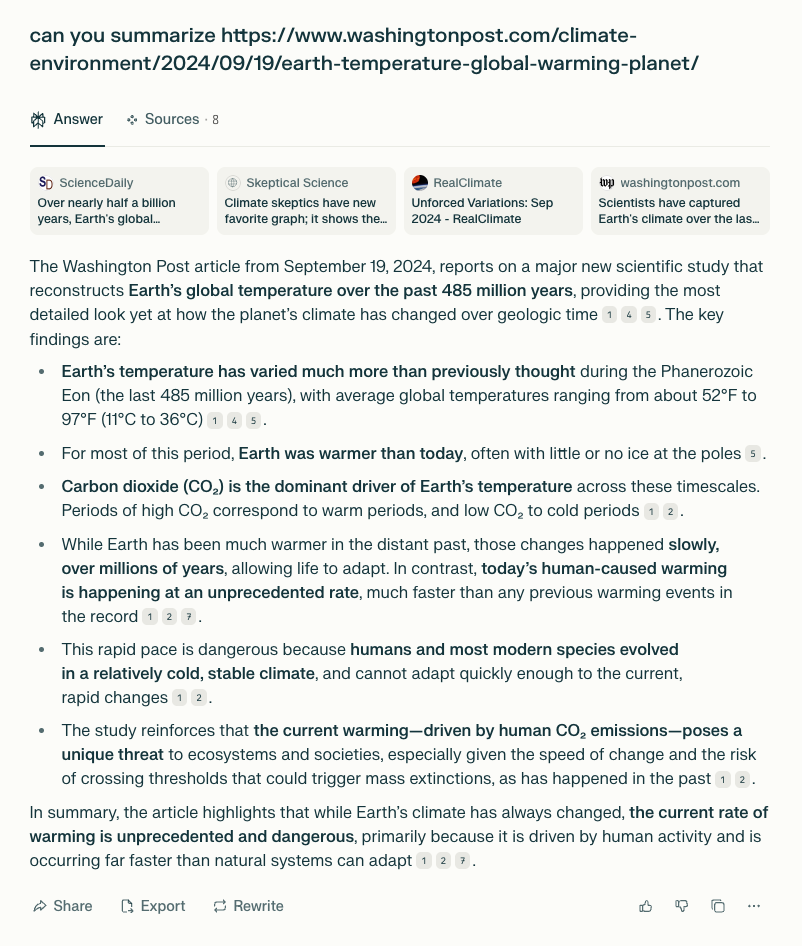

So I turned to an LLM-powered search. Within seconds, I was reading a summary of what the full article actually said.

The article's actual argument

Yes, geologically speaking, we are technically in a cooler era. But the key point was this: the current rate of warming is unprecedented, and human life as we know it only emerged during this cooler epoch. The hotter past was dominated by dinosaurs and massive reptiles, not human civilizations.

In other words: the article wasn’t downplaying global warming. It was underlining the risk.

The headline didn’t just obscure the story; to some extent, it inverted its meaning.

Why this keeps happening

This wasn’t a one-off. It’s how the media works now:

- Headlines are optimized for virality, not clarity.

- Articles are locked behind paywalls.

- Podcasts, tweets, and TikToks amplify the headline, not the nuance.

Joe Rogan isn't a journalist. He’s not held to standards of rigor or correction. But he has a bigger reach than most journalists will ever have. And when even a politician like Bernie Sanders can’t respond on the spot, it’s clear: we’ve built a system where clickbait wins.

It’s not just about climate change. It’s about trust.

When the public can’t access the nuance and when our loudest voices distort it with impunity, what hope do we have of an informed democracy?

Enter LLMs: quietly, powerfully

This is where LLMs come in.

I didn’t have to be a subscriber. I didn’t need to sift through geology papers. An LLM helped me:

- Understand the actual content of the article

- Separate headline spin from core message

- See how the data was being misrepresented

More importantly: I could interrogate it. I could ask follow-up questions, challenge its logic, ask what was missing.

LLMs gave me contextual access, something even Bernie didn’t have on stage.

A new model for journalism?

What if this isn’t just a reading superpower but a societal shift?

Today’s media economy rewards attention. But LLMs could enable a model where we reward contribution to understanding.

Imagine a system where:

- Articles are evaluated not by traffic, but by how much new signal they add to the global corpus

- Researchers and journalists are compensated for advancing public discourse, not just for clicks

- LLMs act as judges of incremental insight, able to highlight and promote the most thoughtful work

This isn’t science fiction. Many of the building blocks exist today. What’s missing is the incentive structure.

A brighter path forward

We don’t need more outrage. We need a modern town square where people can engage with facts instead of wielding headlines like weapons. And you need more than 280 characters to do it. Sorry, X (formerly known as Twitter).

And maybe, just maybe, LLMs are the infrastructure for that future: not as the final word, but as the medium that finally breaks the tyranny of the headline.

What if, instead of facing off in court, The New York Times and OpenAI came together to build that system?

What if AI could reward journalism that matters?

What if trust isn’t dead but just waiting to be rebuilt with better tools?

I'm well aware this is a very techno-optimistic take on the future of journalism, but we still have a chance to build that future, before Joe Rogan headlines become our only truth.