Beneath the Iceberg: Why AI Exposure Isn't Job Displacement

The MIT Iceberg Report found that 11.7% of U.S. wage value is technically automatable, but the headlines got it wrong. AI replaces low-agency tasks, not people. Jobs evolve; they don't disappear.

TL;DR: The MIT Iceberg Report found that 11.7% of U.S. wage value corresponds to tasks that existing AI systems are technically capable of performing. Headlines flattened this into "11% of jobs will be lost." But the report doesn't predict job loss. Instead, it maps technical exposure at the task level. When combined with Stanford's Human Agency Scale research, the real story emerges: AI replaces low-agency tasks, not people. Jobs evolve; they don't disappear.

What the Iceberg Report actually measured

The MIT and Oak Ridge National Laboratory Iceberg Index attempts something unusually sophisticated: it maps 151 million U.S. workers across 923 occupations, 32,000 individual skills, and 13,000 AI tools to measure task-level technical exposure to AI.

The key phrase here is technical exposure.

The authors explicitly state:

"The Index captures technical exposure, where AI can perform occupational tasks, not displacement outcomes or adoption timelines."

This is foundational. The report is not predicting job loss. It is mapping areas where AI could perform tasks if employers choose to adopt it, and if sociotechnical conditions allow it.

The headline number (11.7 percent)

The report finds that:

"11.7 percent of U.S. wage value corresponds to tasks that existing AI systems are technically capable of performing."

That 11.7 percent represents approximately $1.2 trillion of wage value that is potentially automatable at the task level using current AI models.
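As a quick sanity check on the scale, the two rounded figures above can be combined with the 151 million workers the Index covers. This is back-of-the-envelope arithmetic on the numbers quoted in this post, not a calculation from the report itself; the variable names are mine, and the per-worker figure is only an even-spread average for illustration.

```python
# Rounded figures quoted in this post (not recomputed from the report's data).
exposed_share = 0.117        # share of U.S. wage value technically exposed
exposed_wage_value = 1.2e12  # approx. dollars of exposed wage value
workers = 151e6              # workers covered by the Iceberg Index

# Total wage base implied by the two rounded figures.
implied_total_wage_value = exposed_wage_value / exposed_share

# Exposed wage value if it were spread evenly across all workers
# (an illustrative average, not how exposure is actually distributed).
exposed_per_worker = exposed_wage_value / workers

print(f"Implied wage base: ${implied_total_wage_value / 1e12:.1f} trillion")
print(f"Exposed wage value per worker: ${exposed_per_worker:,.0f}")
```

With these inputs the implied wage base is roughly $10.3 trillion and the even-spread average is roughly $7,947 per worker, which is a slice of each paycheck rather than a count of whole jobs.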

The report distinguishes this hidden exposure from the highly visible automations in software development and technical domains:

"Visible adoptions concentrate in software and IT. Hidden exposures extend far below the surface across administrative, financial, HR, logistics, and professional services work."

This is why the report is called "Iceberg". Most of the at-risk tasks sit beneath the surface, not in the obvious places.

The methodology

Rather than relying on job titles, the report builds a skills-based simulation:

- Each job is decomposed into its underlying tasks

- Each task is mapped to required skills

- Each skill is matched to whether any AI system can currently perform it

This is a "task exposure model" rather than a "job automation model". It's the same methodological shift that OpenAI's and Anthropic's recent "task decomposition" papers have taken.
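The three steps above can be sketched in a few lines. This is a minimal toy version under my own assumptions, not the report's actual simulation: the job data, the skill names, the `AI_CAPABLE_SKILLS` set, and the rule that a task counts as exposed only when every skill it requires is covered are all hypothetical.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    wage_share: float        # fraction of the job's wage value tied to this task
    skills: tuple[str, ...]  # skills the task requires


@dataclass(frozen=True)
class Job:
    title: str
    workers: int
    annual_wage: float
    tasks: tuple[Task, ...]


# Hypothetical set of skills that some current AI tool can perform.
AI_CAPABLE_SKILLS = frozenset({"data entry", "document summarization", "scheduling"})


def task_is_exposed(task: Task, capable: frozenset[str]) -> bool:
    # Assumption: a task is exposed only if AI covers every skill it needs.
    return all(skill in capable for skill in task.skills)


def job_exposure(job: Job, capable: frozenset[str]) -> float:
    # Share of the job's wage value that sits in exposed tasks.
    return sum(t.wage_share for t in job.tasks if task_is_exposed(t, capable))


def wage_weighted_exposure(jobs: list[Job], capable: frozenset[str]) -> float:
    # Aggregate exposure: exposed wage value divided by total wage value.
    total = sum(j.workers * j.annual_wage for j in jobs)
    exposed = sum(j.workers * j.annual_wage * job_exposure(j, capable) for j in jobs)
    return exposed / total if total else 0.0


jobs = [
    Job("HR coordinator", 100, 60_000, (
        Task("update employee records", 0.3, ("data entry",)),
        Task("schedule interviews", 0.2, ("scheduling",)),
        Task("resolve employee disputes", 0.5, ("negotiation", "judgment")),
    )),
    Job("Site supervisor", 100, 80_000, (
        Task("write shift reports", 0.1, ("document summarization",)),
        Task("supervise crew", 0.9, ("supervision",)),
    )),
]

for job in jobs:
    print(job.title, round(job_exposure(job, AI_CAPABLE_SKILLS), 2))
print("aggregate", round(wage_weighted_exposure(jobs, AI_CAPABLE_SKILLS), 3))
```

In this toy data the aggregate exposure is about 27 percent of wage value, yet neither job is fully exposed. That gap between an aggregate share and whole jobs is the point the headlines missed.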

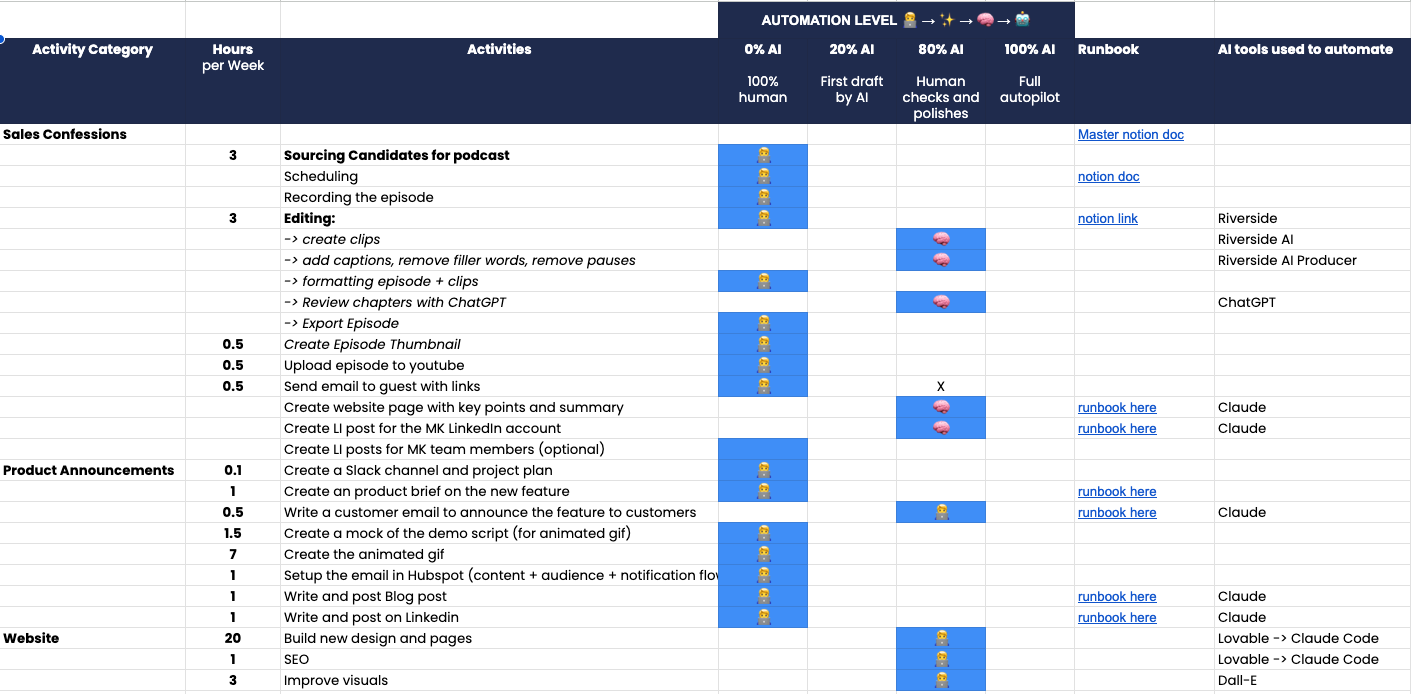

This is very cool and actually well aligned with the model I share internally at HG Insights to determine which tasks can be automated.

Task decomposition model

What the report does not imply (despite headlines)

The media flattened this into: "11 percent of jobs will be lost to AI." But the report emphatically does not say that. The authors stress repeatedly that exposure is not displacement:

"Technical capability does not necessarily imply adoption, nor does exposure imply job loss."

"Our results quantify potential automation capacity, not labor market outcomes."

"Organizations face substantial frictions—managerial, legal, regulatory, cultural—that mediate any transition from capability to adoption."

This is exactly why the "11 percent jobs lost" headline is misleading: the report does not model layoffs, organizational restructuring, net job effects, or job creation.

No timeline. The authors never assign a timeline to the 11.7 percent exposure figure. Media outlets fill that void with their own narratives.

No claim of mass unemployment. Unlike older automation studies (e.g., Frey & Osborne 2013), this report avoids any prediction of how many jobs will vanish or by when.

No assumption of frictionless employer adoption. The report actually states that most organizations struggle to adopt even basic automation.

No prediction of AGI. It is strictly grounded in current AI systems, not speculative future models.

The nuance: what the Iceberg results actually mean

I find that this is where the Stanford Human Agency Scale (HAS) framework makes the story clearer. The Iceberg report shows where AI can technically perform tasks. The Stanford HAS framework explains which of those tasks organizations are actually likely to automate.

[Figure: HAS skill ranking]

Iceberg shows what tasks AI can do

These are primarily low-agency information-processing tasks:

- Analyzing data

- Updating and retrieving information

- Documenting

- Monitoring resources

- Routine evaluation or compliance checks

- Basic communication routing

- Structured workflows

This aligns with the HAS skill ranking: "Analyzing Data or Information" ranks high in wage but low in required human agency. "Documenting/Recording Information" ranks low in both wage and agency. These are exactly the tasks LLMs and agents excel at.

HAS shows which tasks matter to job resilience

HAS classifies tasks into five agency levels (H1–H5).

High-agency work (H4/H5) includes:

- Coordinating people

- Supervising, advising, motivating

- Making decisions under ambiguity

- Interpreting complex or interpersonal contexts

- Negotiating

- Providing judgment under uncertainty

These tasks do not map cleanly to current AI capabilities. As a result, much of the 11.7 percent exposed wage value belongs to H1/H2 tasks that are components of jobs, not whole jobs. This is why the exposure figure cannot be read as a job-loss figure: it counts pieces of jobs, not jobs.

In other words:

- Jobs composed mostly of H4/H5 tasks remain structurally secure

- Jobs with large portions of H1/H2 tasks are partially exposed, not eliminated

- Many jobs will be restructured, not removed
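The three bullets above can be read as a simple rule over a job's task mix. The sketch below is my own illustration, not part of the Stanford framework or the Iceberg report: the `MOSTLY` and `LARGE` thresholds, the example task shares, and the "mixed" fallback label are all hypothetical.

```python
from enum import IntEnum


class Agency(IntEnum):
    H1 = 1
    H2 = 2
    H3 = 3
    H4 = 4
    H5 = 5


# Hypothetical thresholds for "mostly" high-agency and a "large" low-agency portion.
MOSTLY = 0.5
LARGE = 0.3


def agency_shares(tasks):
    """Return (low, high): shares of the job in H1/H2 tasks and in H4/H5 tasks.

    `tasks` is a list of (Agency level, share of the job) pairs.
    """
    total = sum(share for _, share in tasks)
    if total <= 0:
        raise ValueError("task shares must sum to a positive number")
    low = sum(share for level, share in tasks if level <= Agency.H2) / total
    high = sum(share for level, share in tasks if level >= Agency.H4) / total
    return low, high


def classify(tasks):
    low, high = agency_shares(tasks)
    if high > MOSTLY:
        return "structurally secure"
    if low >= LARGE:
        # Exposed tasks are compressed; the job itself is restructured.
        return "partially exposed"
    return "mixed"


# Hypothetical task mixes for two roles.
team_lead = [(Agency.H5, 0.6), (Agency.H4, 0.2), (Agency.H1, 0.2)]
reporting_analyst = [(Agency.H1, 0.4), (Agency.H2, 0.2), (Agency.H4, 0.4)]

print(classify(team_lead))          # structurally secure
print(classify(reporting_analyst))  # partially exposed
```

Note that the function has no "eliminated" outcome. That is a deliberate choice in the sketch, mirroring the argument that exposure restructures jobs rather than removing them.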

This distinction is not captured in the headlines.

Cool story, but what does it mean for the future of work?

In my humble opinion, the Iceberg + HAS synthesis yields three big strategic insights:

1. AI will compress low-agency tasks, not eliminate jobs wholesale

Iceberg describes the capacity of AI. HAS describes human preferences, irreplaceability, and agency requirements. Together they imply:

- AI absorbs the routine portions of jobs (#noDrudgery)

- Humans shift toward coordination, leadership, creative strategy, and interpersonal roles (remember the systems thinking we were talking about in the talent pyramid)

2. The real disruption is inside jobs, not between jobs, and will take time

Headlines assume "11% of jobs gone." The report actually shows "X% of tasks inside most jobs could be automated."

I think it's worth combining this insight with the HAS finding that 41 percent of YC-funded AI startups target tasks workers do not want automated. The Stanford researchers also found that many automatable tasks (H1/H2) are ones workers dislike doing, yet fully delegating them is still not socially acceptable.

This is why "full agents" run into resistance while ChatGPT thrives: people want collaboration, not replacement (yet).

3. The workforce of the future could be more human-centered, ironically because of AI

The combined evidence points to:

- Decreased value of pure information processing

- Increased value of systems-thinking, interpersonal, managerial, strategic, and interpretive skills

- Job security tied to high-agency competencies

- A shift toward meaning-making and judgment

- AI absorbing repetitive mental load, not human agency

This is the part almost no headline captures. While the future of work might have fewer humans (1st order impact), it is unclear how a workforce, amplified by AI, will change the way we work & live (2nd order impact).

Final take

The MIT Iceberg Report does not say that 11 percent of jobs will be cut. It says that 11.7 percent of wage value sits in tasks current AI systems could technically perform. This is more of a reflection on the drudgery of modern work than a prediction of job loss.

There's an untold story behind the headlines. The real disruption won't come through direct job elimination but through a radical job transformation that the foundation model companies are failing to paint. For all their talk about AGI, they are still unable to articulate why we should be stoked about the 2nd order societal impacts of AI. So maybe, after all, they deserve the bad press. OpenAI, Anthropic, Meta, Google PR teams, time to step up your game!!